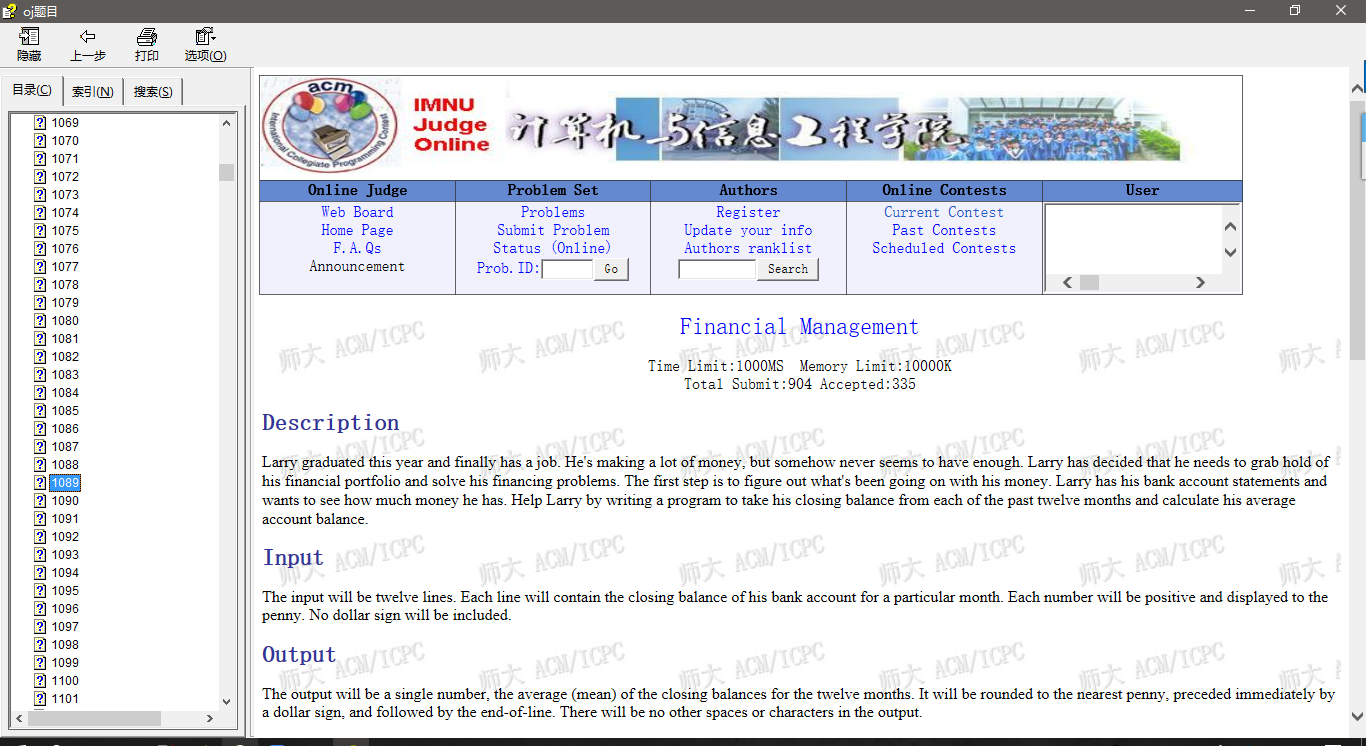

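Daily Crawler Series: several ways to scrape the problems from our school's Online Judge.

Our school's OJ has more than two thousand problems, but it can only be reached from the campus network. Off campus you can't see them at all. So I decided to download every problem and bundle them into a single .chm file that's easy to browse whenever I need it. The result is shown in the screenshot.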
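Turning the downloaded pages into a .chm could look something like the sketch below. This is an assumption, not necessarily how it was done here. The idea is to write an `index.html` that links every saved `NNNN.html` page, plus a minimal HTML Help Workshop project file (`.hhp`) listing those files, and then compile the project with Microsoft's `hhc` compiler. The function names, the `oj.chm`/`oj.hhp` file names and the `test` folder layout are hypothetical choices.

```python
import os
import re


def problem_pages(filenames):
    """Keep only NNNN.html files, ordered by numeric problem ID."""
    pages = [n for n in filenames if re.fullmatch(r'\d+\.html', n)]
    return sorted(pages, key=lambda n: int(n[:-len('.html')]))


def index_html(pages):
    """Table-of-contents page with one link per downloaded problem."""
    items = '\n'.join(
        '<li><a href="{0}">Problem {1}</a></li>'.format(p, p[:-len('.html')])
        for p in pages)
    return ('<html><head><title>OJ problems</title></head><body>\n<ul>\n'
            + items + '\n</ul>\n</body></html>\n')


def hhp_project(pages, chm_name='oj.chm'):
    """Minimal HTML Help Workshop project: output name, start page, file list."""
    lines = ['[OPTIONS]',
             'Compiled file=' + chm_name,
             'Default topic=index.html',
             'Title=OJ problems',
             '',
             '[FILES]',
             'index.html'] + pages
    return '\n'.join(lines) + '\n'


def write_chm_sources(folder):
    """Write index.html and oj.hhp next to the downloaded pages in `folder`."""
    pages = problem_pages(os.listdir(folder))
    with open(os.path.join(folder, 'index.html'), 'w', encoding='utf-8') as f:
        f.write(index_html(pages))
    with open(os.path.join(folder, 'oj.hhp'), 'w', encoding='ascii') as f:
        f.write(hhp_project(pages))
    return pages
```

After downloading, calling `write_chm_sources('test')` writes both files. Running `hhc test\oj.hhp` on a Windows machine with HTML Help Workshop installed should then produce `oj.chm`. Because `index.html` doesn't match the `NNNN.html` pattern, rerunning the function is harmless.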

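The school's OJ site is set up very simply. It uses plain HTTP, and a GET request is all it takes. Here's the code; if anything is unclear, feel free to reach me by email.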
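Because every page is fetched with a plain GET, the only thing that changes between requests is the query string. Here is a small sketch of that using Python 3's standard library. The helper names `problem_url`, `user_status_url` and `fetch` are hypothetical. The base address and paths come from the scripts in this post.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Base path taken from the URLs used in the scripts in this post
BASE = 'http://210.31.181.254/JudgeOnline'


def problem_url(problem_id):
    """A problem page is a plain GET: the ID travels in the query string."""
    return BASE + '/showproblem?' + urlencode({'problem_id': problem_id})


def user_status_url(user_id):
    """Same idea for a user's status page."""
    return BASE + '/userstatus?' + urlencode({'user_id': user_id})


def fetch(url, timeout=10):
    """Issue the GET request and return the raw page bytes (campus network only)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()
```

For example, `fetch(problem_url(1000))` should return the raw HTML of problem 1000, as long as you're on the campus network.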

## Fetch all problems with IDs 1000 to 1050

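```python
import os
import urllib.request

# Problem pages are plain GET requests keyed by problem_id
url = 'http://210.31.181.254/JudgeOnline/showproblem?problem_id='

os.makedirs('test', exist_ok=True)  # make sure the output directory exists

# range() excludes its end value, so 1051 is needed to include problem 1050
for problem_id in range(1000, 1051):
    with urllib.request.urlopen(url + str(problem_id)) as response:
        html = response.read()  # raw bytes; the page's own encoding is kept
    with open(os.path.join('test', str(problem_id) + '.html'), 'wb') as f:
        f.write(html)
```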

## Fetch every problem a given account has attempted

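```python
import http.cookiejar
import os
import re
import urllib.parse
import urllib.request

url = 'http://210.31.181.254/JudgeOnline/showproblem?problem_id='
login_url = 'http://210.31.181.254/JudgeOnline/login?action=login'
problem_list_url = 'http://210.31.181.254/JudgeOnline/problemlist'  # not used below
user_url = 'http://210.31.181.254/JudgeOnline/userstatus?user_id='

# A cookie-aware opener keeps the session cookie set by the login response,
# so the following requests are made as the logged-in user
opener = urllib.request.build_opener(
    urllib.request.HTTPCookieProcessor(http.cookiejar.CookieJar()))
opener.addheaders = [('User-Agent', 'Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)')]

# Fields of the login form; replace with your own account
data = urllib.parse.urlencode({
    'user_id1': '2015xxxxxx',
    'password1': 'xxxxxx',
    'B1': 'login',
}).encode('ascii')  # POST bodies must be bytes; passing data makes this a POST
with opener.open(login_url, data) as response:
    print(response.read().decode('utf-8', errors='replace'))

user_id = 2015110
with opener.open(user_url + str(user_id)) as response:
    # errors='replace' keeps decoding from failing if the page isn't UTF-8
    status_page = response.read().decode('utf-8', errors='replace')

# Collect the four-digit IDs from problem_id=NNNN on the status page;
# set() drops problems that appear more than once
problem_ids = sorted(set(re.findall(r'problem_id=(\d{4})', status_page)))

os.makedirs('test', exist_ok=True)
for problem_id in problem_ids:
    with opener.open(url + problem_id) as problem:
        with open(os.path.join('test', problem_id + '.html'), 'wb') as f:
            f.write(problem.read())

print(problem_ids)
```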
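This second approach hinges on pulling problem IDs out of the user's status page. The script does that with a regex. As an alternative (swapped in here, not the method used above), the standard library's `HTMLParser` can read the `problem_id` parameter from each link's `href`, which doesn't depend on attribute order or spacing. The sketch assumes the status page links to problems as `<a href="...?problem_id=NNNN">`; that is an assumption about the page's markup. `ProblemLinkParser` and `extract_problem_ids` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import parse_qs, urlparse


class ProblemLinkParser(HTMLParser):
    """Collects problem_id values from the href of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.ids = set()  # a set, so repeated links count once

    def handle_starttag(self, tag, attrs):
        if tag != 'a':
            return
        href = dict(attrs).get('href') or ''  # attributes without a value give None
        query = parse_qs(urlparse(href).query)
        for value in query.get('problem_id', []):
            if value.isdigit():
                self.ids.add(value)


def extract_problem_ids(html):
    """Return the unique problem IDs as strings, in numeric order."""
    parser = ProblemLinkParser()
    parser.feed(html)
    parser.close()
    return sorted(parser.ids, key=int)
```

The IDs come back as strings, so each one can be appended to `url` directly, just like the regex results in the script.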

Here is the result: